Table of Contents

Starting values for covariance components

To run WOMBAT, starting values for all matrices of covariance components are required. A common question is how best to derive these.

- Often we have estimates for the same (or very similar) traits from previous analyses which can be used as guideline. For instance, estimates from different locations or breeds, including literature values.

- The more traits are analysed simultaneously, the more effort to find `good' starting values is appropriate !

A good strategy is to build up matrices of starting values from a series of preliminary analyses.

Univariate analyses

Preliminary, univariate analyses for all traits of interest are highly recommended !

- Univariate analyses are fast and generally converge in a few iterates. (Hint : Use a less stringent convergence criterion than the default)

- Univariate analyses help in checking records for each trait against potential little problems.

- Unless there are specific reasons (such as selection bias), we expect variance component estimates from uni- and multi-variate analyses to be similar. Having univariate estimates thus provides a check on multivariate results, and helps identifying any problems with the latter.

- Univariate analyses are quite robust against the choice of starting values.

Usually we have some expectation of the relative importance of each random effect fitted; e.g. we expect a given trait to have high, medium or low heritability (about 0.5, 0.3 or 0.1). We generally also have an estimate of the `raw' variance for the trait. Assuming the model of analysis explains a certain proportion of variation, we can derive reasonable starting values for the variance components from the remainder and our expectation of the proportion of variance.

Bivariate analyses

The next step to build up a matrices of starting values for multivariate analyses might be a series of bivariate analyses.

- Bivariate analyses again are reasonably fast, converge quickly and are more robust against `bad' starting values than higher-dimensional analyses.

- Suitable starting values for bivariate analyses can be constructed from the univariate estimates of variance components for the two traits and a guess for the values of correlations. Again, a guess (or literature value) on the level (high, medium or low) and direction (positive or negative) of each correlation is generally close enough.

To make life easier, WOMBAT has an option (––subset) which will generate the parameter files for all pairs of bivariate analyses from that for a multivariate analysis involving all traits of interest.

Combining results

WOMBAT provides the facility to combine results from partial analyses involving subsets of traits into pooled matrices of covariance components that are suitable (positive definite) as starting values for higher-dimensional multivariate analyses. This uses the iterative summation of expanded part matrices, suggested by Mäntyssari (1999), and is invoked with the run option −−itsum.

Starting values for random regression analyses

<box 38% round blue right|What is a basis function?> In regression analysis we regress on some functions of our dependent variable. These functions are referred to as basis functions. We have one basis function per regression coefficient. Say we want to fit a linear regression including an intercept: y=b0+b1x. In this case, the basis functions are f0(x)=1 and f1(x)=x. For RR analyses we often fit more complicated, non-linear basis functions, such as orthogonal polynomials (e.g. Legendre) or spline functions. </box>

Random regression (RR) analyses require a guess for the matrices of covariances among random regression coefficients to be specified. These depend on the choice of basis functions. Often there are no `obvious' values to use. As for multivariate analyses, a series of simpler, preliminary analyses is generally worthwhile to help deriving the starting values, carry out additional checks of the data and provide some base values to compare results from RR analyses to.

A possible strategy is to build up covariance matrices for a grid of selected values of the control variable (i.e. the covariable for the RR coefficients, such as age, days in milk, distance), and to use a simple least-squares or ML procedure to estimate covariance functions corresponding to the chosen basis for one matrix at a time.

Building a grid

Take a step backwards: Before RR analyses, we tended to treat all records for values of the control variable in a certain interval as a `trait' and analyzed it as such, possibly with some adjustment for differences in this covariable. This is very useful as a preliminary step for RR analyses. So what you need to do is:

- Define the grid. Subdivide your data into suitable intervals, based on the value of the control variable. In doing so, make sure that your interval is either narrow enough so that all individuals have single records only, or, alternatively, is wide enough so that most individuals have repeated records.

For example, when analysing test day records for dairy cattle, you might have up to 10 records taken at approximately monthly intervals. An obvious subdivision would then be into 10 `traits' corresponding to the test number. - Obtain estimates of covariance matrices. Carry out univariate analyses for all intervals and all pairs of intervals and pool results into valid (positive definite) covariance matrices as described above.

Estimating covariance functions

Starting values for the covariances among random regression coefficients can then be obtained by fitting the required covariance function to the grid of covariances for intervals, using the mean value of the control variable for each interval as point value. This is done for one random effect at a time, and can be done using least-squares, weighted or generalised least-squares or maximum likelihood.

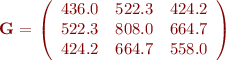

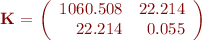

Kirkpatrick et al. (1990) give the following, simple worked example: Consider a grid of 3 values with genetic covariance matrix

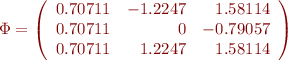

Assume these represent values of the control variable which are simply 1, 2 and 3. The next step depends on our choice of basis function and order of fit. Kirkpatrick et al. (1990) consider Legendre polynomials and a quadratic covariance function, i.e. 3 random regression coefficients. Legendre polynomials are defined for the interval [-1,1]. Hence, the first step is to transform the values of the control variable to this range. This gives -1, 0 and 1.

Next we need to evaluate the basis function (quadratic Legendre polynomial) for these three points. This gives

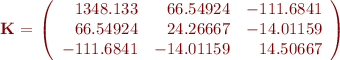

The matrix of covariances among RR coefficients can then be obtained by solving  for K. For the example, this gives

for K. For the example, this gives

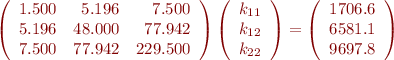

Fitting 2 RR coefficients only, we would truncate  to its first two columns. This would give least-squares equations

to its first two columns. This would give least-squares equations

and estimate

A simple Fortran 95 program to perform these example calculations can be downloaded: here.

You can read more about Legendre polynomials (including formulae on how to evaluate them and further links) at Legendre_polynomials. Note that the Legendre polynomials used by Kirkpatrick et al. (1990) differ by a scale factor from those given in some other references.

Another popular choice are spline functions, in particular B-splines.

WOMBAT has Legendre polynomials (scaled as above) and equi-distant B-splines as in-built options, and can accommodate other choices through the USR option.

References

<bibtex> @article{beal1991,

author = {Beal, Stuart L.},

title = {Miscellanea: Computing initial estimates with mixed

effects models: A general method of moments},

journal = Biometrika,

volume = 78,

number = 1,

pages = {217--220},

doi = {10.1093/biomet/78.1.217},

year = 1991,

}

@ARTICLE{kirk90,

author = {Kirkpatrick, M. and Lofsvold, D. and Bulmer, M.},

pages = {979--993},

title = {Analysis of the inheritance, selection and evolution of growth trajectories},

journal = {Genetics},

volume = {124},

year = {1990}

}

</bibtex>